As Impala and the Cloudera have helped the community over the past few years, nothing better than the Aaron to talk about it with Hadoop, let’s see how it works with HDFS, and how the latter has been modified to meet new requirements.

When people talk about Hadoop, a part of this is tied to HDFS (Hadoop Distributed File System).

This is used only as a distributed file system; it was unique and exclusively for working with large data blocks that need to be fast for good performance with MapReduce.

Each HDFS cluster is composed of clusters with multiple nodes, which store metadata and data.

There are block maps and file system metadata that organize the entire access flow.

The Impala is a general-purpose engine for query processing in HQL (Hive Query Language), it works for both analytical processing and real-time execution.

It runs distributed across clusters, and we can submit queries with ODBC/JDBC.

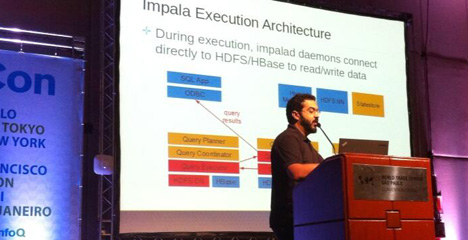

When we deploy Impala in our environment, what’s behind the scenes are two daemons: impalad and statestored.

The impalad handles all client requests; the statestored deals with all necessary states for the operation of the daemons.

Each request to Impala is made via odbc/jdbc_; these requests are paused by means of execution plans.

First, Impala is concerned with low-latency queries and for this, it doesn’t exclude distributed scenarios, such as co-located replicas blocked, by local reading versus network speed.

Impala added a feature that specifies where a data set should know its replicas.

Currently, disk throughput isn’t as fast as we can access them to process files in real-time, with the weight of files, and for this Impala has optimized HDFS to read directly from main memory.

By these and other improvements Impala puts itself 5-10x faster than Hive for simple queries and 20-50x for complex queries with joins.