Why should we worry?

That is the question that opened Todd Lipcon’s keynote…

Perhaps because, over the last few years, companies have seen an explosion in the volume, variety, and speed of the data they need to handle every day.

This has been a blessing and a curse.

While the data explosion allowed us to build new types of applications and derive highly intelligent insights, developers found that the previous generation of data management tools and frameworks collapsed when faced with terabytes or petabytes of often poorly structured data.

When Todd was a child, he found a program that pretended to be a person answering questions, and after asking some questions, he discovered that the program was very stupid.

When he showed it to his father, his father challenged him to improve it; he tried, but could not get very far at his age.

Twenty years later, he saw Watson answering all these questions. The difference? Big data!

Going back a bit in time, he walked through the first steps of a path that passed through recursive, sluggish indexing and, without a doubt, proprietary equipment and software from large companies.

This started to change with Google, which created its own storage and processing infrastructure.

MapReduce emerged from the KISS premise (keep it simple).

These technologies are still fully operational today. Google never sold them, but it published several papers that gave rise to many of the technologies that are out there.

Hadoop emerged later with Doug Cutting, who had problems similar to Google’s and, reading Google’s papers, found a good strategy for working with large files in a distributed manner.

Hadoop is a scalable data storage and processing system that can work on any machine.

The machines in a Hadoop cluster are known as nodes, and processing the data distributed across those nodes is completely transparent, as if there were no real distinction between local and remote.

One of the fundamental parts of Hadoop is HDFS, which lets us work with large files by dividing them into blocks of a maximum size and replicating those blocks for redundancy, so that failures do not cause information loss or interrupt processing.

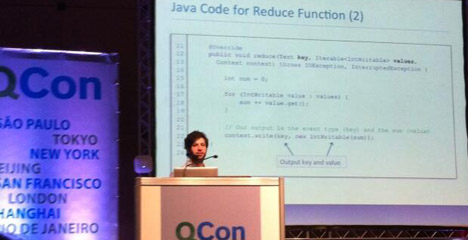
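To make the block and replica idea concrete, here is a minimal sketch in plain Python. It is not HDFS code: the function names, the node names, and the round-robin placement are all assumptions made for illustration (real HDFS uses its own placement policy), and the 128 MiB block size and replication factor of 3 are simply assumed values for the example.

```python
# Illustrative only: a toy model of splitting a file into blocks and
# replicating each block on several nodes. All names are hypothetical.

BLOCK_SIZE = 128 * 1024 * 1024  # assumed maximum block size (128 MiB)
REPLICATION = 3                 # assumed number of copies per block


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs that cover a file of file_size bytes."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    This is a simplification chosen for the sketch, not the real policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def readable_after_failure(placement, failed_node):
    """Return the blocks that still have at least one copy on a live node."""
    return {
        block
        for block, holders in placement.items()
        if any(node != failed_node for node in holders)
    }


if __name__ == "__main__":
    blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MiB file
    placement = place_replicas(len(blocks), ["node-a", "node-b", "node-c", "node-d"])
    for block, holders in placement.items():
        print(block, blocks[block], holders)
    print(readable_after_failure(placement, "node-b"))
```

Because every block lives on more than one node, losing a single node in this toy model leaves every block readable, which is the redundancy the paragraph above describes.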

Another fundamental part is MapReduce, a programming model with two main parts: map instructions, which transform, parse, or filter data, always run first, and always return results; and reduce instructions, which summarize the data.

These instructions bring great simplicity to daily work: they process one record at a time, need no explicit I/O, and scale well.
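The two parts can be sketched in a few lines of plain Python. This is only a single-process illustration of the programming model, not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and the grouping step in the middle is written out by hand here, whereas a real framework would do it for us across nodes. The input is the children's song Todd used in his word count example, quoted further on.

```python
import re
from collections import defaultdict

SONG = [
    "Soft kitty,",
    "Warm kitty,",
    "Little ball of fur.",
    "Happy kitty,",
    "Sleepy kitty,",
    "Purr Purr Purr",
]


def map_phase(line):
    """Map: take one record (a line) and emit a (word, 1) pair per word."""
    for word in re.findall(r"[a-z]+", line.lower()):
        yield (word, 1)


def shuffle(pairs):
    """Group all emitted values by key, as the framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word, counts):
    """Reduce: summarize all the values collected for one key."""
    return (word, sum(counts))


def word_count(lines):
    """Run map, then shuffle, then reduce over the given lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(word, counts) for word, counts in shuffle(pairs).items())


if __name__ == "__main__":
    for word, count in sorted(word_count(SONG).items()):
        print(word, count)
```

Note that `map_phase` only ever sees one line and `reduce_phase` only ever sees one word with its counts, and neither does any explicit I/O. That isolation is what lets a framework run many copies of each function in parallel on different nodes.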

At this point, Todd presented a MapReduce example on a simple domain: counting the words in the children’s song below. He went into detail on how this model gains speed when implemented with Hadoop’s MapReduce.

> Soft kitty,
> Warm kitty,
> Little ball of fur.
> Happy kitty,
> Sleepy kitty,
> Purr Purr Purr

Some frameworks were mentioned:

Spark: a framework that works with MapReduce, supports multiple languages, and has an interactive shell.

Comparing equivalent code written for Hadoop and for Spark, there is a 10x reduction in the number of lines required.

There are gains in processing speed as well;

Sqoop: facilitates the efficient exchange of large amounts of data between Apache Hadoop and structured data stores, such as relational databases;

Flume: imports data into HDFS as it is generated, on any number of machines.
